Datasets built on top of VQA

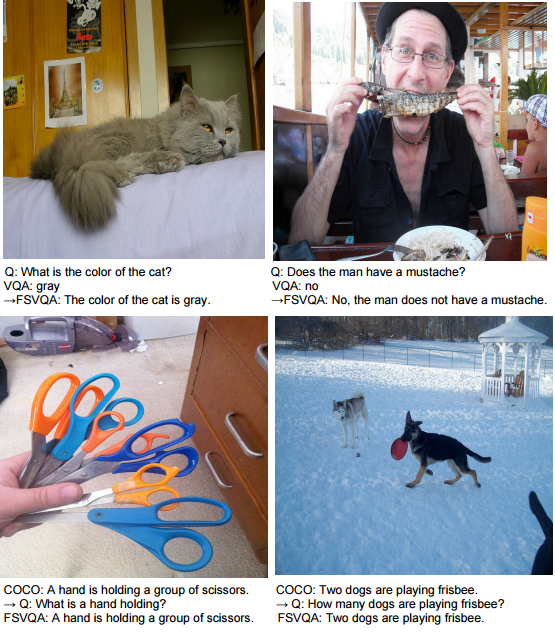

Full-Sentence Visual Question Answering (FSVQA) contains nearly 1 million image-based pairs of questions and full-sentence answers. It was built by applying rule-based natural language processing techniques to the original VQA dataset and to the captions in the MS COCO dataset.
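To give a feel for what "rule-based" expansion of a short answer into a full sentence can look like, here is a toy sketch. The two rules (auxiliary-first yes/no questions, and "What color is/are ...?" questions) and all function names are hypothetical illustrations. They are not the actual rules used to build FSVQA, which are considerably more extensive.

```python
# Toy, hypothetical rules for expanding a (question, short answer) pair into a
# full-sentence answer. NOT the FSVQA pipeline; illustration only.

DETERMINERS = {"the", "a", "an", "this", "that", "these", "those"}
AUXILIARIES = {"is", "are", "was", "were", "does", "do", "can"}


def split_subject(words):
    """Return (subject, remainder); a subject is one word or determiner + noun."""
    if len(words) >= 2 and words[0].lower() in DETERMINERS:
        return " ".join(words[:2]), words[2:]
    return " ".join(words[:1]), words[1:]


def to_full_sentence(question, answer):
    words = question.rstrip("?").split()
    if not words:
        return answer
    first = words[0].lower()
    # Rule 1: yes/no questions that start with an auxiliary verb.
    if first in AUXILIARIES and answer.lower() in {"yes", "no"}:
        subject, rest = split_subject(words[1:])
        negation = [] if answer.lower() == "yes" else ["not"]
        body = " ".join([subject, first] + negation + rest)
        return f"{answer.capitalize()}, {body}."
    # Rule 2: "What color is/are <subject>?" questions.
    if [w.lower() for w in words[:2]] == ["what", "color"] and len(words) >= 4:
        verb = words[2].lower()
        subject = " ".join(words[3:])
        sentence = f"{subject} {verb} {answer}."
        return sentence[0].upper() + sentence[1:]
    # No rule matched: keep the short answer unchanged.
    return answer


print(to_full_sentence("Is the man wearing a hat?", "yes"))
print(to_full_sentence("What color is the bus?", "red"))
```

Real questions vary far more than these two patterns, which is why a dataset-scale conversion needs many rules plus extra context (such as image captions) to fill in details a short answer leaves out.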

The VQA-HAT dataset consists of ~60k human attention annotations that record where people choose to look while answering questions about images. They were collected via a game-inspired interface that requires subjects to sharpen regions of a blurred image in order to answer a question.
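The deblurring mechanic can be sketched in a few lines: the subject sees a blurred image, each "sharpen" action reveals original pixels in a small region, and the accumulated mask of revealed pixels acts as the attention map. This is a simplified illustration on a tiny grayscale image stored as nested lists. The box blur, the circular brush, and the names `box_blur`, `sharpen_at`, and `composite` are assumptions made for this example, not details of the actual VQA-HAT interface.

```python
def box_blur(image, radius=1):
    """Mean-filter a 2D grayscale image, averaging only in-bounds neighbours."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += image[yy][xx]
                        count += 1
            out[y][x] = total / count
    return out


def sharpen_at(mask, cy, cx, radius):
    """Hypothetical brush: mark pixels within `radius` of (cy, cx) as revealed."""
    for y in range(len(mask)):
        for x in range(len(mask[0])):
            if (y - cy) ** 2 + (x - cx) ** 2 <= radius ** 2:
                mask[y][x] = 1.0


def composite(original, blurred, mask):
    """Show original pixels where mask is 1 and blurred pixels where it is 0."""
    return [
        [m * o + (1 - m) * b for o, b, m in zip(ro, rb, rm)]
        for ro, rb, rm in zip(original, blurred, mask)
    ]


# Usage: a 5x5 image with one bright pixel; the subject sharpens the centre.
image = [[0.0] * 5 for _ in range(5)]
image[2][2] = 9.0
blurred = box_blur(image)
mask = [[0.0] * 5 for _ in range(5)]  # accumulated "attention" mask
sharpen_at(mask, 2, 2, radius=0)
view = composite(image, blurred, mask)
```

After the subject answers, the accumulated `mask` (rather than the composited view) is what one would keep as the human attention annotation for that question.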

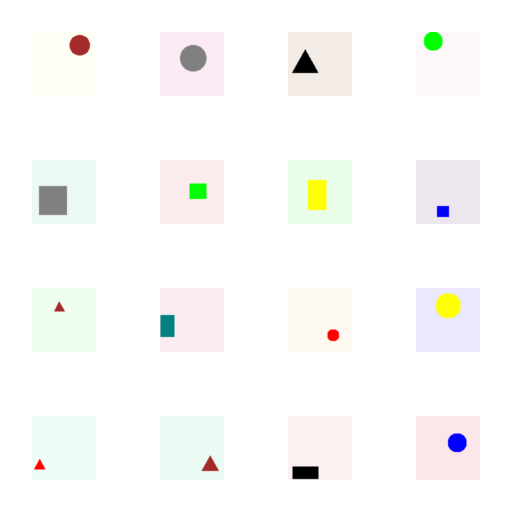

The easy-VQA dataset is a beginner-friendly way to get started: a “Hello World” for VQA. It contains 5k simple geometric images and 48k questions with only 13 possible answers. Highly accurate models can be trained on this dataset relatively quickly, even on modest hardware.
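Because the answer space is so small, a common way to frame a dataset like this is as classification: each distinct answer string becomes a class, and a model outputs one score per class. The sketch below builds such an answer index, one-hot encodes an answer, and computes a majority-class baseline as a sanity check. The answer strings are hypothetical toy data, not easy-VQA's actual answer list, and the helper names are illustrative.

```python
from collections import Counter


def build_answer_index(answers):
    """Map each distinct answer string to a class index (sorted for determinism)."""
    return {a: i for i, a in enumerate(sorted(set(answers)))}


def one_hot(answer, index):
    """Encode an answer as a one-hot vector over the answer vocabulary."""
    vec = [0.0] * len(index)
    vec[index[answer]] = 1.0
    return vec


def majority_baseline_accuracy(train_answers, test_answers):
    """Accuracy of always predicting the most common training answer."""
    majority, _ = Counter(train_answers).most_common(1)[0]
    return sum(a == majority for a in test_answers) / len(test_answers)


# Hypothetical toy answers, for illustration only.
train_answers = ["yes", "no", "red", "circle", "yes"]
index = build_answer_index(train_answers)
print(index)
print(one_hot("red", index))
print(majority_baseline_accuracy(train_answers, ["yes", "no", "yes", "triangle"]))
```

A trained model should comfortably beat the majority baseline; if it does not, that usually points to a bug in the data pipeline rather than a hard dataset.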